Tests done at sea

Last summer, the MarineGuardian project took a major step forward during a real-world research campaign at sea that combined artificial intelligence, marine science, and fisheries sustainability.

From 6 to 17 September, on board the research vessel Miguel Oliver (a ship belonging to the General Secretariat for Fisheries of the Government of Spain), scientists and engineers from CSIC worked in the Cantabrian Northwest national fishing grounds (ICES divisions 8c and 9a), carrying out bottom-trawl operations under real fishing conditions with different selective gears.

During the campaign, the scientists deployed iObserver 2.0, an electronic observer system installed over the conveyor belt in the fish sorting area, which automatically takes pictures while the catch is being sorted. Each picture is analysed by a deep learning (DL) image recognition model that, for each individual fish:

- identifies the species,

- estimates its length and weight.

The system then combines the DL output from all pictures to generate a catch report for the entire haul.
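As a rough illustration of this last step, the sketch below combines per-picture results into a per-species summary for one haul. It is not the iObserver 2.0 implementation: the `Detection` record, the `haul_report` function and the example values are hypothetical, and the sketch assumes that each fish is counted in exactly one picture.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Detection:
    """One fish recognised in one picture (hypothetical record layout)."""
    species: str
    length_cm: float
    weight_kg: float


def haul_report(pictures):
    """Combine per-picture detections into per-species totals for a haul."""
    totals = defaultdict(
        lambda: {"count": 0, "weight_kg": 0.0, "length_sum_cm": 0.0}
    )
    for detections in pictures:
        for d in detections:
            entry = totals[d.species]
            entry["count"] += 1
            entry["weight_kg"] += d.weight_kg
            entry["length_sum_cm"] += d.length_cm
    # Turn the running sums into the figures a catch report would list.
    return {
        species: {
            "count": e["count"],
            "total_weight_kg": round(e["weight_kg"], 3),
            "mean_length_cm": round(e["length_sum_cm"] / e["count"], 1),
        }
        for species, e in totals.items()
    }


# Illustrative values only, not data from the campaign.
pictures = [
    [Detection("hake", 40.0, 0.5), Detection("megrim", 25.0, 0.25)],
    [Detection("hake", 50.0, 1.0)],
]
report = haul_report(pictures)
```

Here `report["hake"]` holds a count of 2, a total weight of 1.5 kg and a mean length of 45.0 cm. A real system would also have to handle fish that appear in several consecutive pictures, which this sketch ignores.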

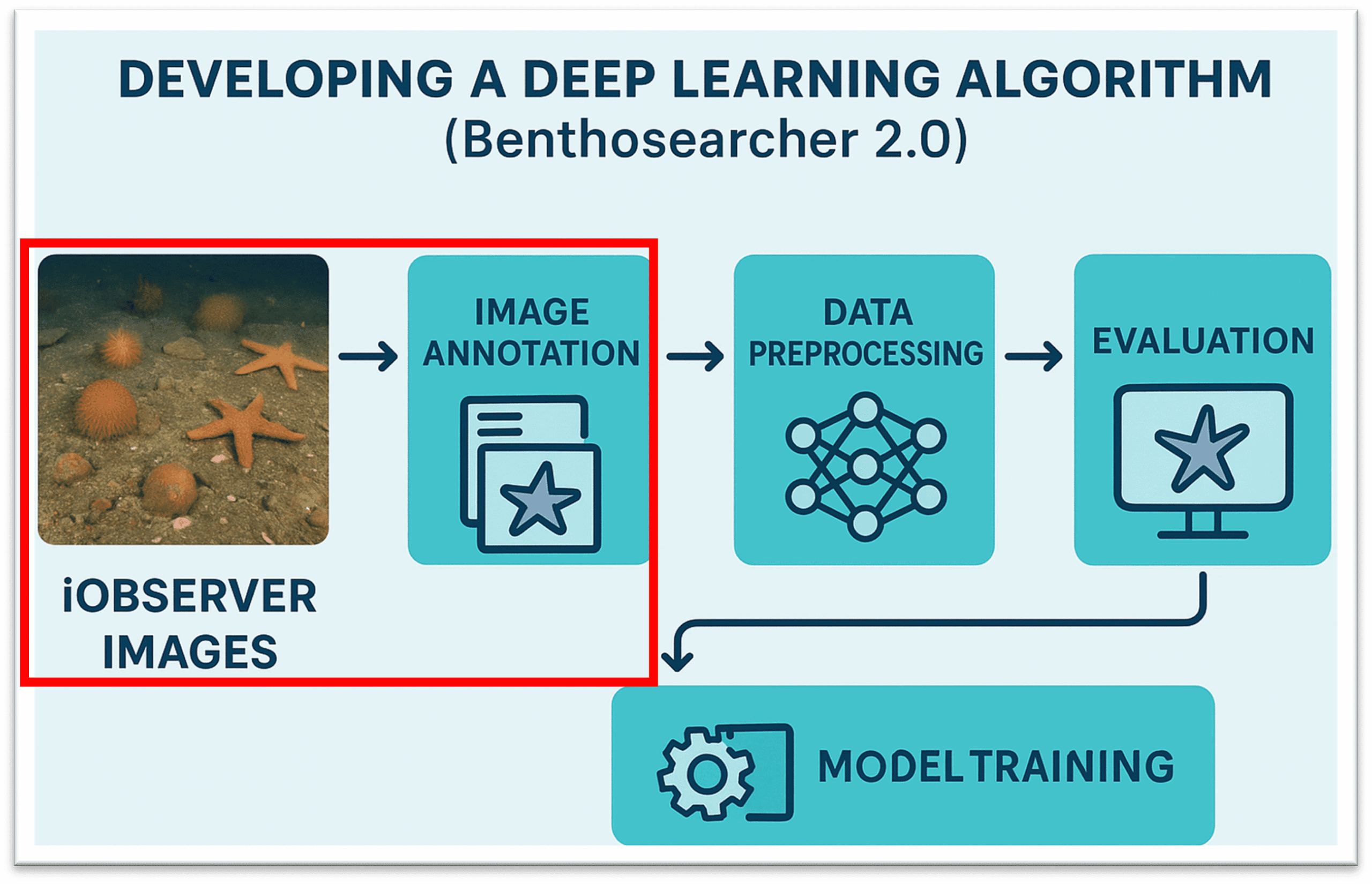

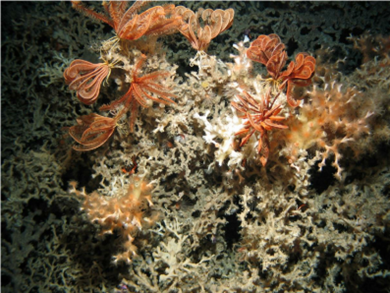

During the survey, we began developing BenthoSearcher 2.0, an advanced AI and computer vision tool built on the iObserver 2.0 hardware and designed to automatically detect and identify benthic species living on the seafloor. It will quantify the presence of PETS (Protected, Endangered, and Threatened Species) and VMEs (Vulnerable Marine Ecosystems), even in complex fishing environments. When deployed on commercial vessels, the system will deliver near real-time maps that support more sustainable fishing, help avoid unwanted catches, and enhance marine habitat cartography.

Building the foundations for AI

A key objective of this trial was data collection. Using iObserver 2.0, the team started building a new image dataset of benthic invertebrates that serve as indicators of VMEs (Vulnerable Marine Ecosystems) and PETS (Protected, Endangered, and Threatened Species). This dataset will later be used to train and validate new AI algorithms that identify and quantify these species.

Hundreds of benthic invertebrates were carefully processed to build the training data. The specimens were:

- identified by experts,

- measured and weighed,

- photographed from multiple angles and positions, to maximise the visual variety of the images and create a high-quality dataset for training future AI models.

Pictures were taken of both single specimens and groups of specimens, with and without overlap, to improve the robustness and recognition accuracy of the algorithm. In total, more than 1,700 training images were collected, covering emblematic species such as corals, sea pens and other sensitive benthic invertebrates.

Why does this matter?

By enabling near real-time identification of sensitive marine habitats and species during fishing operations, tools like BenthoSearcher 2.0 can:

- support more sustainable fishing practices,

- help avoid unwanted catches,

- strengthen the protection of vulnerable ecosystems.

What’s next?

The data collected during this campaign will now be used to train and validate new AI algorithms that identify and quantify VME and PETS indicator species. These algorithms will form the basis of BenthoSearcher 2.0.